This is something I haven’t seen in other Haskell IDEs before, but which I think is useful:

Context-sensitive lexing: the lexer treats certain tokens differently based on information defined globally in the file, e.g. LANGUAGE pragmas.

But first, a quick recap of how lexing is done in Visual Haskell 2010:

- The IDE asks me to color text one line at a time.

- Every time I want to color a line, I make a call to HsLexer.dll, a binding to the GHC API that calls the GHC lexer directly.

- Multiline comments are handled in local state and are never passed to the lexer. Since I’m lexing one line at a time, the lexer can’t find the boundaries of comment blocks, so instead I keep track of the comment tokens {- and -} and identify blocks using a local algorithm that mimics GHC’s matching.

- Using that, I have always been able to color GHC pragmas differently from normal comments. They have special meaning, so I display them as such.
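To make the comment-matching idea concrete, here is a minimal Haskell sketch of GHC-style nested comment matching. It is only an illustration under simplifying assumptions, not the IDE’s actual C# tracker: it scans a whole string rather than one line at a time, ignores string literals and -- line comments, and treats a top-level block opened with {-# as a pragma. The names Block and findBlocks are hypothetical.

```haskell
-- A top-level {- ... -} block found in the source text.
data Block = Block
  { blockStart :: Int        -- offset of the opening "{-"
  , blockEnd   :: Maybe Int  -- offset just past the closing "-}"; Nothing if unclosed
  , isPragma   :: Bool       -- True if the block was opened with "{-#"
  } deriving (Show, Eq)

-- Find top-level comment blocks. As in GHC, comments nest, so
-- "{- a {- b -} c -}" is a single block.
findBlocks :: String -> [Block]
findBlocks = go 0
  where
    -- Scanning outside any comment, looking for an opening "{-".
    go :: Int -> String -> [Block]
    go _ [] = []
    go i ('{':'-':rest) = inside (i + 2) 1 (Block i Nothing (take 1 rest == "#")) rest
    go i (_:rest)       = go (i + 1) rest

    -- Scanning inside a block at nesting depth d.
    inside :: Int -> Int -> Block -> String -> [Block]
    inside _ _ b [] = [b]  -- input ended first: report the block as unclosed
    inside i d b ('{':'-':rest) = inside (i + 2) (d + 1) b rest
    inside i d b ('-':'}':rest)
      | d == 1    = b { blockEnd = Just (i + 2) } : go (i + 2) rest
      | otherwise = inside (i + 2) (d - 1) b rest
    inside i d b (_:rest) = inside (i + 1) d b rest
```

In GHCi, findBlocks "{-# X #-}" gives [Block {blockStart = 0, blockEnd = Just 9, isPragma = True}], while findBlocks "x {- open" gives a single block with blockEnd = Nothing. Keeping unclosed blocks around matters because a later keystroke may close them.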

The original Haskell code for lexing was:

```haskell
-- @@ Export
-- | Perform lexical analysis on the input string.
lexSourceString :: String -> IO (StatelessParseResult [Located Token])
lexSourceString source = do
    buffer <- stringToStringBuffer source
    -- A dummy source location, since the text does not come from a file.
    let srcLoc  = mkSrcLoc (mkFastString "internal:string") 1 1
        -- Default flags: no language extensions are enabled.
        dynFlag = defaultDynFlags
        result  = lexTokenStream buffer srcLoc dynFlag
    return $ convert result
```

Pretty straightforward. I won’t explain everything it does here. What matters is that we need to get the LANGUAGE pragma entries into the dynFlag value above.

To that end, I created a new function:

```haskell
-- @@ Export
-- | Perform lexical analysis on the input string, taking a newline-separated
--   list of extensions to enable.
lexSourceStringWithExt :: String -> String -> IO (StatelessParseResult [Located Token])
lexSourceStringWithExt source exts = do
    buffer <- stringToStringBuffer source
    let srcLoc  = mkSrcLoc (mkFastString "internal:string") 1 1
        dynFlag = defaultDynFlags
        flagx   = flags dynFlag
        -- Append the flags for the requested extensions to the defaults.
        result  = lexTokenStream buffer srcLoc
                    (dynFlag { flags = flagx ++ configureFlags (lines exts) })
    return $ convert result
```

It receives the list of extensions to enable as a single newline-delimited (\n) string. The reason is that WinDll does not yet marshal lists properly. List support will be in the final version, at which point I’ll rewrite these parts as well. Until then, this will do.
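The post doesn’t show how the newline-delimited string is built. Here is a minimal sketch, assuming the caller has the text of each pragma block and only wants names from LANGUAGE pragmas. In the IDE this step presumably happens on the C# side before calling into HsLexer.dll. The helpers languageExtensions, encodeExtensions, trim and splitOn are hypothetical, and the match on LANGUAGE is case-sensitive for simplicity.

```haskell
import Data.Char (isSpace)
import Data.List (intercalate, isPrefixOf, isSuffixOf)

-- Hypothetical helper: extract the extension names from one pragma block,
-- e.g. "{-# LANGUAGE TemplateHaskell, TypeFamilies #-}".
-- Anything that is not a LANGUAGE pragma yields no names.
languageExtensions :: String -> [String]
languageExtensions pragma
  | "{-#" `isPrefixOf` p && "#-}" `isSuffixOf` p
  , ("LANGUAGE" : _) <- words body
  = filter (not . null) (map trim (splitOn ',' (drop (length "LANGUAGE") (trim body))))
  | otherwise = []
  where
    p    = trim pragma
    body = take (length p - 6) (drop 3 p)  -- strip "{-#" and "#-}"

-- Remove leading and trailing whitespace.
trim :: String -> String
trim = f . f where f = reverse . dropWhile isSpace

-- Split a string on every occurrence of a separator character.
splitOn :: Char -> String -> [String]
splitOn c s = case break (== c) s of
  (chunk, [])       -> [chunk]
  (chunk, _ : rest) -> chunk : splitOn c rest

-- Join names with '\n', the format lexSourceStringWithExt expects.
-- The Haskell side splits them apart again with `lines`.
encodeExtensions :: [String] -> String
encodeExtensions = intercalate "\n"
```

For example, encodeExtensions (languageExtensions "{-# LANGUAGE TemplateHaskell, TypeFamilies #-}") produces "TemplateHaskell\nTypeFamilies", and applying lines to that string recovers the original two names.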

The function configureFlags used above,

```haskell
configureFlags :: [String] -> [DynFlag]
```

converts the list of strings into a list of the recognized DynFlags that affect lexing.
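The body of configureFlags isn’t shown. One plausible shape is a lookup table from extension names to flags that silently drops names it doesn’t recognize. The sketch below uses a small stand-in DynFlag type so it runs without the GHC API. The constructor names mirror GHC’s Opt_TemplateHaskell and friends, but the table is an assumption, not the code in HsLexer.dll.

```haskell
import Data.Char (isSpace)
import Data.Maybe (mapMaybe)

-- Stand-in for GHC's DynFlag so this sketch runs on its own. In the real
-- code these would be the constructors exported by the GHC API.
data DynFlag
  = Opt_TemplateHaskell
  | Opt_TypeFamilies
  | Opt_MagicHash
  deriving (Show, Eq)

-- Hypothetical table of extensions that change how source is lexed.
lexerExtensions :: [(String, DynFlag)]
lexerExtensions =
  [ ("TemplateHaskell", Opt_TemplateHaskell)  -- [| |] brackets, $( ) splices
  , ("TypeFamilies",    Opt_TypeFamilies)     -- "family" becomes a keyword
  , ("MagicHash",       Opt_MagicHash)        -- names and literals ending in #
  ]

-- Map extension names to flags. Blank and unknown names are dropped, so a
-- half-typed name such as "TypeFam" simply has no effect.
configureFlags :: [String] -> [DynFlag]
configureFlags = mapMaybe (\name -> lookup (trim name) lexerExtensions)
  where trim = let f = reverse . dropWhile isSpace in f . f
```

Trimming each name also removes a stray '\r', which lines leaves behind if the caller happens to send Windows line endings: configureFlags (lines "TemplateHaskell\r\nTypeFamilies") still yields both flags.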

Now on to the C# side. I already had the locations of the multiline comment sections, so all I needed to do, on any change, was pick out the sections I already know to be pragmas (I know this because, remember, I color them differently).

But since the section-tracking code is generic, I did not want to hardcode this. Instead, I created the following event and abstract methods:

```csharp
public delegate void UpdateDirtySections(object sender, Entry[] sections);

public event UpdateDirtySections DirtyChange;

/// <summary>
/// Raise the dirty section events by filtering the list with dirty spans to reflect
/// only those spans that are not the DEFAULT span
/// </summary>
protected abstract void notifyDirty();

/// <summary>
/// Raises DirtyChange on behalf of subclasses, which cannot raise an event
/// declared in the base class directly.
/// </summary>
/// <param name="list">The sections to report to subscribers.</param>
internal void raiseNotifyDirty(Entry[] list)
{
    // Copy to a local so the null check and the call see the same handler.
    UpdateDirtySections handler = DirtyChange;
    if (handler != null)
        handler(this, list);
}
```

The specific implementation of notifyDirty for the CommentTracker is:

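```csharp
// Requires: using System.Linq;
protected override void notifyDirty()
{
    // Keep only closed blocks (start and end both known) that are not
    // ordinary comments, i.e. the pragma sections.
    Entry[] sections = list.Where(x => x.isClosed && !(x.tag is CommentTag)).ToArray();
    base.raiseNotifyDirty(sections);
}
```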

That is, we only want entries that are not tagged with the normal CommentTag and that are closed, i.e. have both their start and end values filled in. (The comment-tracking algorithm also tracks unclosed comment blocks. It needs to in order to match correctly as comments get broken or introduced.)
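The same filter can be modeled as a plain list filter in Haskell. This is only an illustration: Tag, Entry, isClosed and dirtySections below are hypothetical stand-ins for the C# classes and for notifyDirty, not code from the IDE.

```haskell
import Data.Maybe (isJust)

-- Hypothetical model of the tracker's tags and entries.
data Tag = CommentTag | PragmaTag
  deriving (Show, Eq)

data Entry = Entry
  { entryStart :: Int
  , entryEnd   :: Maybe Int  -- Nothing while the block is still unclosed
  , entryTag   :: Tag
  } deriving (Show, Eq)

-- A block is closed once both its start and end are known.
isClosed :: Entry -> Bool
isClosed = isJust . entryEnd

-- Counterpart of the Where clause in notifyDirty:
-- keep closed sections that are not ordinary comments.
dirtySections :: [Entry] -> [Entry]
dirtySections = filter (\e -> isClosed e && entryTag e /= CommentTag)
```

Given a closed pragma, a closed ordinary comment and an unclosed pragma, only the closed pragma survives the filter.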

The only thing left is to subscribe to this event from the Tagger that produces the syntax highlighting and react to it. My implementation does two things. First, it keeps track of the current collection of pragmas and the previous one.

Then it calls checkNewHLE to see whether a valid syntax pragma has been introduced or removed. If so, it asks for the entire file to be re-colored.

This call to checkNewHLE is important. When the user modifies an existing pragma, for instance adding TypeFamilies to {-# LANGUAGE TemplateHaskell #-}, we get notified on every keypress, but until the whole keyword TypeFamilies has been typed there’s no point in re-coloring the whole file.
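The post doesn’t show checkNewHLE itself. Here is a minimal sketch of the idea, assuming it compares the recognized extensions in the previous and current pragma collections. The names needsRecolor and known are hypothetical. Because only recognized names are compared, typing "TypeFam" letter by letter leaves the result unchanged until the full name appears.

```haskell
import Data.List (nub, sort)

-- Hypothetical check in the spirit of checkNewHLE: re-color the whole file
-- only if the set of recognized extensions actually changed.
needsRecolor :: (String -> Bool)  -- is this a known, lexer-relevant extension?
             -> [String]          -- extensions from the previous pragma collection
             -> [String]          -- extensions from the current pragma collection
             -> Bool
needsRecolor known previous current = normalize previous /= normalize current
  where
    -- Ignore unknown names, ordering and duplicates.
    normalize = sort . nub . filter known
```

With known = (`elem` ["TemplateHaskell", "TypeFamilies"]), going from ["TemplateHaskell"] to ["TemplateHaskell", "TypeFam"] does not trigger a re-color, but completing the name to "TypeFamilies" does.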

The result can be seen below, and to be frank, I find it very cool 😀

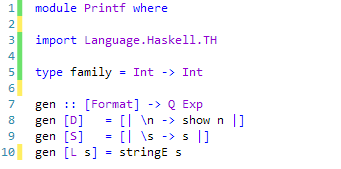

What it looks like with no pragmas

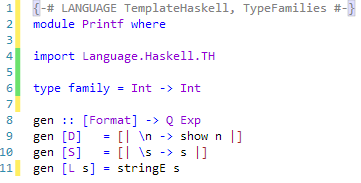

Now look at what happens when we enable TemplateHaskell and TypeFamilies.

Notice how, with the extensions enabled, “family”, “[|” and “|]” now behave as keywords. This should be useful for showing programmers when they’re using certain features. For instance, with TypeFamilies enabled, line 6 would no longer be valid because “family” is now a keyword.