I’ve recently been looking into how to make the type checking part faster. The type checker part consists of two parts just like most of the code in Visual Haskell 2010, A Haskell side and a C# side.

This post is about optimizations on the C# side.

the setup currently is as follows:

Every 500ms after the user has finished typing, a call is made to typecheck() which does a few things,

- Finds the current module information (cached)

- Generates a temporary file which has the same content of the In-Memory buffer

- Makes a call to the Haskell world

- Interprets the results

The part I thought I could optimize is that I didn’t want to have to write the buffer out to a file only to have GHC read it back in. Under normal circumstances the files will probably be in disk cache and not even written out to begin with, but we would still have to make a couple of kernel calls (about 6).

It’s not like the new approach wouldn’t have drawbacks, for one thing you’d have the serialization drawback, you suddenly have to serialize large strings from and to the Haskell world, but above that the GHC Api doesn’t even support type checking of In-Memory buffers.

So the first part is to edit the GHC Api and add this functionality.

Modifying GHC

I won’t explain this in detail, but I’ll just outline the “gist” of it.

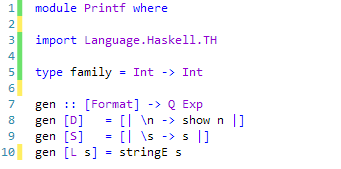

Whenever you want to tell GHC want to inspect, It does so by inspecting all the “Targets” you’ve specified. An example of this would be

target <- guessTarget file Nothing

addTarget target

which means, we first ask it to guess what the string is (a module, or filename) and it’ll construct the appropriate Target for us. In order to support In-Memory buffers I added a new TargetId

| TargetVirtualFile StringBuffer FilePath (Maybe Phase)

— ^ This entry loads the data from the provided buffer but acts like

– it was loaded from a file. The path is then used to inform GHC where

– to look for things like Interface files.

– This Target is useful when you want to prevent a client to write it’s

– in-memory buffer out to a file just to have GHC read it back in.

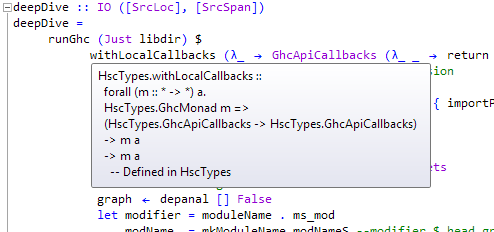

This takes the string buffer, and the original filename, the filename is only used to discover properties about the buffer (the most important of which is what type of input it is, hspp, hsc, hs or lhs) and along with this a new function was create the facilitate the create of a virtual target.

— | Create a new virtual Target that will be used instead of reading the module from disk.

– However object generation will be set to False. Since the File on disk would could

– and is most likely not the same as the content passed to the function

virtualTarget :: GhcMonad m => StringBuffer -> FilePath -> m Target

virtualTarget content file

= return (Target (TargetVirtualFile content file Nothing) False Nothing)

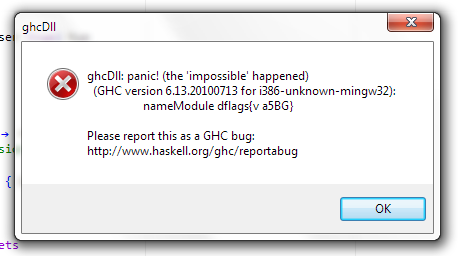

so for it’s all pretty straight forward, the rest I won’t explain since they have no bearing on how to use this new code, but what I’ve done was basically trace the workings of the load2 function, and where appropriate added some new code to instead of reading a file into a buffer, to just use the buffer passed on to virtualTarget.

The problem is, in runPhase a system process is called to deLit a Literate Haskell file which is a bit of a problem since it means that I would have to have the file on disk anyway. I decided not to worry about that for now but instead to focus on implementing the change for normal Haskell files first so I can run some benchmarking code.

Testing

Now for the actual testing

I created a new DLL file which contains the compiled code from two Haskell functions

getModInfo :: Bool -> String -> String -> String -> IO (ApiResults ModuleInfo)

and

getModStringInfo :: Bool -> String -> String -> String -> String -> IO (ApiResults ModuleInfo)

They both do the same amount of work, only the latter passes along as a target a VirtualTarget instead of a normal File target. But first it’s time to see if the changes actually did something.

This first test just asks for all the information it can get from a file named “VsxParser.hs”

Phyx@PHYX-PC /c/Users/Phyx/Documents/VisualHaskell2010/Parser

$ ghcii.sh VsxParser

GHCi, version 6.13.20100521: http://www.haskell.org/ghc/

Ok, modules loaded: VsxParser.

Prelude VsxParser> getModInfo True "VsxParser.hs" "VsxParser" ""

Success: ModuleInfo {functions = (70,[FunType {_Tspan = VsxParser.hs:256:1-8, _Tname = "showPpr’", _type = Just "Bool -> a -> String", _inline = (0,[])},FunType {_Tspan = VsxParser.hs:252:1-7, _Tname = "mkSized", _type = Just "[a] -> (Int,[a])", _inline = (0,[])},FunType {_Tspan = VsxParser.hs:244:1-17, _Tname = "configureDynFlags", _type = Just "DynFlags -> DynFlags", _inline = (0,[])},FunType {_Tspan = VsxParser.hs:237:1-19, _Tname = "createLocatedErrors", _type = Just "ErrMsg -> [ErrorMessage]", _inline = (0,[])},FunType {_Tspan = VsxParser.hs:230:1-13, _Tname = "processErrors", _type = Just "SourceError -> IO (ApiResults a)", _inline = (0,[])},FunType {_Tspan = VsxParser.hs:69:1-4, _Tname = "main", _type =

(content goes on and on and on)

the content will go on for many more pages so I won’t post that

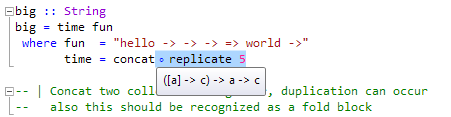

now, We call getModStringInfo asking for information about the same file, but this time we pass along a much smaller and completely different buffer than the one of the file on disk. namely a module with just 1 function “foo = 5”

Prelude VsxParser> getModStringInfo True "module VsxParser where\nfoo = 5\n" "VsxParser.hs" "VsxParser" ""

Success: ModuleInfo {functions = (1,[FunType {_Tspan = VsxParser.hs:2:1-3, _Tname = "foo", _type = Just "Integer", _inline = (0,[])}]), imports = (1,[Import {_Ispan = Implicit import declaration, _Iname = "Prelude", _pkg = Nothing, _source= False, _qual = False, _as = Nothing, _hiding = (0,[])}]), types = (0,[]), warnings = (0,[])}

As can be seen it used the content of the buffer and not the file to perform the analysis. So far so good. The new virtualTarget seems to be working just fine. Now comes the part you’ve all been waiting for, the numbers!

Benchmarking

First the setup, The two methods above as already mentioned were compiled to a static DLL. The tests are written in C# and the full code for it is as follows (you can skip this if it doesn’t interest you). Also my laptop harddrive is just 5400rpm so please keep this in mind 🙂

static void Main(string[] args)

{

Console.WriteLine("Running Benchmarks....");

int length = 500;

List<Double> stringB = new List<double>();

List<Double> fileB = new List<double>();

string content = File.ReadAllText("VsxParser.hs");

Console.Write("Press [enter] to start In-Memory buffer test");

Console.ReadLine();

Console.WriteLine("Running String tests...");

unsafe

{

for (int i = 0; i < length; i++)

{

DateTime start = DateTime.Now;

WinDll.Parser.getModInfoByString(true, content, "VsxParser.hs", "VsxParser", "");

stringB.Add(DateTime.Now.Subtract(start).TotalMilliseconds);

}

}

Console.Write("Press [enter] to start File based test");

Console.ReadLine();

Console.WriteLine("Running File tests...");

unsafe

{

for (int i = 0; i < length; i++)

{

DateTime start = DateTime.Now;

string path = Path.ChangeExtension(System.IO.Path.GetTempFileName(), Path.GetExtension("VsxParser.hs"));

File.WriteAllText(path, content);

WinDll.Parser.getModuleInfoByPath(true, "VsxParser.hs", "VsxParser", "");

File.Delete(path);

fileB.Add(DateTime.Now.Subtract(start).TotalMilliseconds);

}

}

Console.WriteLine("Writing results...");

StreamWriter fs1 = File.CreateText("stringB.csv");

for (int i = 0; i < length; i++)

{

fs1.Write(stringB[i].ToString());

if (i < length - 1)

fs1.Write(", ");

}

fs1.Write(fs1.NewLine);

fs1.Close();

fs1 = File.CreateText("fileB.csv");

for (int i = 0; i < length; i++)

{

fs1.Write(fileB[i].ToString());

if (i < length - 1)

fs1.Write(", ");

}

fs1.Write(fs1.NewLine);

fs1.Close();

Console.WriteLine("Done.");

}

Aside from this, I’ve also used the Windows performance monitor to monitor haddrive activity. Both methods are ran 500times and all the times are measured in the milliseconds range.

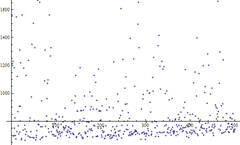

Results

first off, the intuition was right, Windows observed a 100% disk cache hit for the duration of the test. So the files were always in cache. So the expected difference shouldn’t be that big (or should it?)

| |

String buffer |

File Buffer |

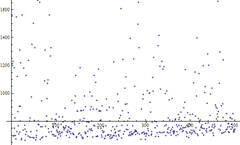

| Overview |

|

|

| Performance clustering |

|

|

| Geometric mean |

636.594594942071087ms |

666.617770612978724ms |

| Variance |

1436.0ms |

1336.56ms |

| Mean Deviation |

26.6618ms |

27.1434ms |

The two charts reveal that they both performed in about the same ranges on average with no real noticeable difference. You have to remember that these numbers are in milliseconds (ms). The difference in Geometric means is almost negligible 30.02317567090764ms

Viewing both charts together we see a significant overlap which means on average the one doesn’t perform all that better from the other on normal disk usage.

But what happens when we have abnormal disk usage? What if the drive was so busy that the disk cache starts missing. Would the File based approach still perform “good enough” ?

To simulate high disk usage I used a Microsoft tool called SQLIO, it’ official use is to find disk performance capacity, but it does so by stressing the drive, so it works fine for us. (get it at http://www.microsoft.com/downloads/details.aspx?displaylang=en&FamilyID=9a8b005b-84e4-4f24-8d65-cb53442d9e19)

This is ran from a batch file in two modes read and write for about 36sec per mode. which should cover the length of 1 test. The batch file used is

sqlio -kW -s10 -frandom -o8 -b8 -LS -Fparam.txt

sqlio -kW -s36 -frandom -o8 -b64 -LS -Fparam.txt

sqlio -kW -s36 -frandom -o8 -b128 -LS -Fparam.txt

sqlio -kW -s36 -frandom -o8 -b256 -LS -Fparam.txt

sqlio -kW -s36 -fsequential -o8 -b8 -LS -Fparam.txt

sqlio -kW -s36 -fsequential -o8 -b64 -LS -Fparam.txt

sqlio -kW -s36 -fsequential -o8 -b128 -LS -Fparam.txt

sqlio -kW -s36 -fsequential -o8 -b256 -LS -Fparam.txt

sqlio -kR -s36 -frandom -o8 -b8 -LS -Fparam.txt

sqlio -kR -s36 -frandom -o8 -b64 -LS -Fparam.txt

sqlio -kR -s36 -frandom -o8 -b128 -LS -Fparam.txt

sqlio -kR -s36 -frandom -o8 -b256 -LS -Fparam.txt

sqlio -kR -s36 -fsequential -o8 -b8 -LS -Fparam.txt

sqlio -kR -s36 -fsequential -o8 -b64 -LS -Fparam.txt

sqlio -kR -s36 -fsequential -o8 -b128 -LS -Fparam.txt

sqlio -kR -s36 -fsequential -o8 -b256 -LS -Fparam.txt

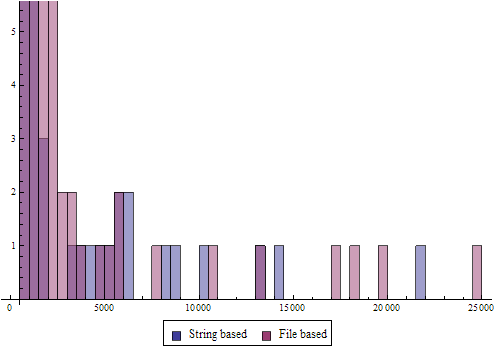

Running SQLIO and the benchmarks again the first thing that catches my eyes is that

disk cache performance has dropped from 100% to

Looking at the dataset I see a lot of completely missed caches. Also remember that the SQLIO is also reading data,

so the disk cache activity also represents it’s reads and not just those of the benchmark as before. So let’s analyze the numbers like before

| |

String buffer |

File Buffer |

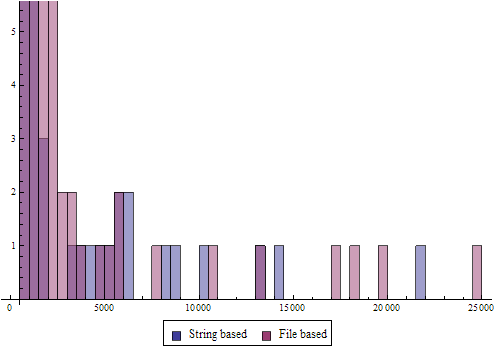

| Overview |

|

|

| Performance clustering |

|

|

| Geometric mean |

883.339857882210200ms |

904.505721026752581ms |

| Variance |

2.26107*10^6ms |

3.81543*10^6ms |

| Mean Deviation |

449.206ms |

611.77ms |

These results are quite surprising since the difference in mean now is just 21.16586314454238ms. So under heavy load they start to converge to the same performance level.

So while both have some significant outliers, both perform on average in the same category. It’s sad and hard to admit, but Unless I made a mistake when implementing the VirtualFile (which very well might be true) having a String vs File buffer doesn’t really make a big difference mostly due to the OS’s management of I/O and the disk caching going on.

In fact under heavy loads Windows started to use more and more “Fast Reads” and “Fast Writes” These require much less kernel and user mode calls than a full I/O call, so even that advantage was negated somewhat under heavy load, while the overhead of marshalling the string hasn’t changed, which might explain the smaller difference in Geometric mean in the second benchmark.

So the conclusion of the story is is, for now, there’s nothing to gain from the calls on the C# side, but maybe there is in the processing of the result but that’s saved for another time :). Up next, optimizing the Haskell Code to gather more complete module information and do it faster!.