One of the problems I’ve been struggling with for a while now is the presence of pesky memory leaks in Visual Haskell. Hs2lib has one convention: it doesn’t free any memory, so you’re responsible for freeing all of it.

As far as I knew, I was freeing any and all pointers that I had. It should not have been leaking, and yet it was. So I decided to get to the root of this problem. I wrote a simple application that uses the Lexer classes of Visual Haskell and emulates a user scrolling by feeding it the lines of a Haskell file one at a time.

Using the Debug Diagnostics Tools I was able to track the application and make a full memory dump every few seconds in order to track the progression of the leak. The results were rather surprising:

WARNING – DebugDiag was not able to locate debug symbols for HsLexer.dll, so the reported function name(s) may not be accurate.

HsLexer.dll is responsible for 11.95 MBytes worth of outstanding allocations. The following are the top 2 memory consuming functions:

HsLexer!HsEnd+1343ac4: 8.20 MBytes worth of outstanding allocations.

HsLexer!HsEnd+150ae89: 2.00 MBytes worth of outstanding allocations.

This was detected in LexerLeakTest.exe__PID__4660__Date__07_04_2011__Time_03_20_30PM__18__Leak Dump – Private Bytes.dmp

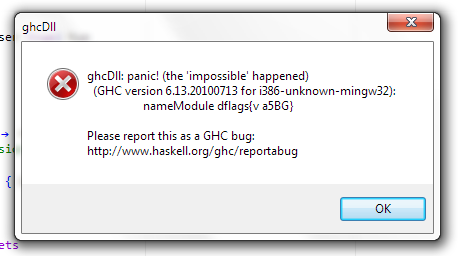

So according to this tool, my little program was leaking quite extensively and, not surprisingly, it was all coming from inside my Haskell code. Unfortunately, GHC/GCC cannot produce the proper symbols (.pdb files) that the Microsoft debugging tools understand. So while we know conclusively that the program is leaking, we don’t know where.

Hs2lib-Debug

This is where the new version of Hs2lib comes into play. The idea is to track any and all allocations and de-allocations made during the run of the program, in essence a simple profiler.

For this reason we now have Debug versions of the modules. Through the magic of CPPHS, Cabal and custom preprocessors we get the usual “release” modules and “debug” modules, the latter of which write the allocations to a file.

I’ll skip over the implementation, but the idea is to override all allocation functions with custom ones. The current version writes the structure out to disk using the rather slow Show/Read instances generated by GHC; I will be replacing these in the future. The structure is:

    data MemAlloc = MemAlloc { memFun   :: Caller
                             , memStack :: Stack
                             , memStart :: Address
                             , memStop  :: Maybe Address
                             , memSize  :: Maybe MemSize
                             , memTime  :: String
                             }
                  | MemFree  { memStack :: Stack
                             , memStart :: Address
                             , memSize  :: Maybe MemSize
                             , memTime  :: String
                             }
                  deriving (Show, Read)

The allocations get written out to a file called “Memory.dump”.
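To make the Show/Read approach concrete, here is a minimal sketch of writing events as one shown record per line and parsing them back with `reads`. The types are cut-down stand-ins for the ones above (no `Caller`, `memStop` or `memTime`), and `encodeEvents` and `decodeEvents` are hypothetical names of my own, not part of Hs2lib.

```haskell
import Data.Char (isSpace)

-- Simplified stand-ins for the post's types (assumptions, not Hs2lib's definitions).
type Address = Int
type MemSize = Int

data Stack = Empty | Then String Stack
  deriving (Show, Read, Eq)

data MemEvent
  = MemAlloc { memStack :: Stack, memStart :: Address, memSize :: Maybe MemSize }
  | MemFree  { memStack :: Stack, memStart :: Address, memSize :: Maybe MemSize }
  deriving (Show, Read, Eq)

-- Serialise the events, one per line, using the derived Show instance.
encodeEvents :: [MemEvent] -> String
encodeEvents = unlines . map show

-- Parse the log back with the derived Read instance.
-- Lines that do not parse completely are silently skipped.
decodeEvents :: String -> [MemEvent]
decodeEvents txt =
  [ e | l <- lines txt, (e, rest) <- reads l, all isSpace rest ]
```

The derived instances cost nothing to write, which is presumably why they are a convenient first choice, but `reads` is a general-purpose parser and gets slow on large logs.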

A piece of one such file is:

    MemAlloc { memFun = Record
             , memStack = Then "WinDll\\Lib\\/NativeMapping_Base.cpphs:242(peekCWString)"
                          (Then "HsLexer.hs:85(fromNative)"
                          (Then "HsLexer.hs:83(lexSourceStringWithExtA)"
                          Empty))
             , memStart = 43309024
             , memStop = Just 43309026
             , memSize = Just 2
             , memTime = "1312779866"
             }
    MemFree { memStack = Then "WinDll\\Lib\\/NativeMapping_Base.cpphs:246(freeCWString)"
                         (Then "HsLexer.hs:85(fromNative)"
                         (Then "HsLexer.hs:83(lexSourceStringWithExtA)"
                         Empty))
            , memStart = 43309024
            , memSize = Just 2
            , memTime = "1312779866"
            }

From this you can see that it not only records the allocation, but also carries a simple artificial stack that shows where the allocation originated. The current implementation is rather simplistic. I will look into expanding this later, but for now it suits my needs.
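As a rough illustration of the idea, the sketch below pairs an artificial stack with wrappers around `mallocBytes` and `free` that record each event. It is not the Hs2lib implementation: the bodies of `newStack` and `pushStack` are guesses based on the shape of the dump above, the log lives in an `IORef` instead of a file, and `trackedMalloc`, `trackedFree` and `recordedEvents` are hypothetical names.

```haskell
import Data.IORef (IORef, newIORef, modifyIORef', readIORef)
import Foreign.Marshal.Alloc (mallocBytes, free)
import Foreign.Ptr (Ptr, IntPtr, ptrToIntPtr)

-- Artificial call stack: the innermost frame comes first, as in the dump.
data Stack = Empty | Then String Stack
  deriving (Show, Eq)

-- Assumed behaviour: start a stack with a single frame.
newStack :: String -> Stack
newStack label = Then label Empty

-- Assumed behaviour: push a frame on top of an existing stack.
pushStack :: Stack -> String -> Stack
pushStack st label = Then label st

-- One record per allocation or de-allocation.
data Event
  = Alloc Stack IntPtr Int   -- stack, start address, size in bytes
  | Free  Stack IntPtr       -- stack, start address
  deriving (Show, Eq)

type Log = IORef [Event]

-- Allocate n bytes and record where the request came from.
trackedMalloc :: Log -> Stack -> Int -> IO (Ptr a)
trackedMalloc logRef st n = do
  p <- mallocBytes n
  modifyIORef' logRef (Alloc st (ptrToIntPtr p) n :)
  return p

-- Record the de-allocation, then release the memory.
trackedFree :: Log -> Stack -> Ptr a -> IO ()
trackedFree logRef st p = do
  modifyIORef' logRef (Free st (ptrToIntPtr p) :)
  free p

-- Events are prepended to the log, so reverse to get chronological order.
recordedEvents :: Log -> IO [Event]
recordedEvents logRef = reverse <$> readIORef logRef
```

Passing the stack explicitly keeps the wrappers pure Haskell; the price is that every call site has to thread it through, which is what the generated code does with `st`.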

For those wondering, this tracker is not enabled by default. To enable it, just pass the “--debug” flag to Hs2lib. Compiling with this flag instructs the library to use the Debug version of the libraries, and changes the code generators so that they also add extra information to the generated code.

Since de-allocations also have to be tracked, using this flag also exposes a free function which should be used to free pointers. If the calling convention used is stdcall, a function “freeS” is exported; if it is ccall, “freeC” is exported. The reason is that these functions are statically defined: they aren’t generated by the tool but are part of its library.

Performing analysis

Once we have a .dump file, the next step is to analyze this information. This is where the new tool Hs2lib-debug comes in. This tool replays the allocations in a pseudo heap. If all goes well, the heap should be empty at the end. If it has any entries left, we have a leak.
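The replay idea can be sketched in a few lines. This is only an illustration under simplifying assumptions: events carry just an address and a flattened origin label, the pseudo heap is a `Data.Map` (from the containers package) rather than whatever Hs2lib-debug uses internally, and `replay` and `leaksByOrigin` are hypothetical names.

```haskell
import qualified Data.Map.Strict as Map
import Data.List (foldl')

type Address = Int
type Origin  = String   -- flattened stack label (simplification)

data Event
  = Alloc Address Origin
  | Free  Address
  deriving (Show, Eq)

-- Replay the log in order: an allocation adds an entry to the pseudo heap,
-- a free removes it. Whatever is left at the end was never freed.
replay :: [Event] -> Map.Map Address Origin
replay = foldl' step Map.empty
  where
    step heap (Alloc addr origin) = Map.insert addr origin heap
    step heap (Free addr)         = Map.delete addr heap

-- Group the outstanding allocations by where they came from.
leaksByOrigin :: [Event] -> Map.Map Origin Int
leaksByOrigin evs =
  Map.fromListWith (+) [ (origin, 1) | origin <- Map.elems (replay evs) ]
```

For example, `leaksByOrigin [Alloc 1 "a", Alloc 2 "b", Free 1]` leaves a single outstanding allocation attributed to `"b"`, while a log in which every address is freed replays to an empty map.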

Invoking it is quite easy; just pass the folder that contains the dump file as an argument:

    Hs2lib-debug.exe -v .\MemDumps

And that’s all.

Running this on the dump file created from the lexer program returned:

    *** Program starting up…
    *** Reading file ‘.\MemDumps\Memory.dump’…
    *** Found 31890 record(s).
    *** Analyzing [********************] 100.00%
    *** Found 1135 outstanding allocation(s).
    1135 unfreed references found originating from HsLexer.hs:85(fromNative)\;\;\WinDll\Lib\/NativeMapping_Base.cpphs:242(peekCWString)\;
    *** Cleaning up….
    Done.

The messy stack output reads as follows: entries in the path are separated by a ; instead of the usual \ character.

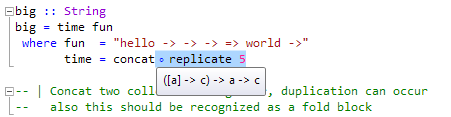

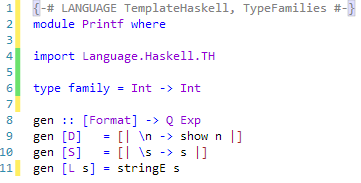

The function the profiler pointed out is:

    lexSourceStringWithExtA :: StablePtr (IORef [ExtensionFlag]) -> CWString -> IO ((StatelessParseResultPtr (Located Token)))
    lexSourceStringWithExtA a1 a2 =
      do let st = newStack (__FILE__ ++ ":" ++ (show __LINE__) ++ "(lexSourceStringWithExtA)")
         a1p <- fromNative (pushStack st (__FILE__ ++ ":" ++ (show __LINE__) ++ "(fromNative)")) a1
         a2p <- fromNative (pushStack st (__FILE__ ++ ":" ++ (show __LINE__) ++ "(fromNative)")) a2
         res <- lexSourceStringWithExt a1p a2p
         toNative st res

And the exact line is:

    a2p <- fromNative (pushStack st (__FILE__ ++ ":" ++ (show __LINE__) ++ "(fromNative)")) a2

This is interesting for a couple of reasons. The profiler is saying that the pointer associated with the CWString (which is defined as a Ptr CWchar) is never freed. But why not?

The answer lies in the C# marshaller and the types being used. We are currently marshalling C# strings using the String datatype. Strings in C# are immutable, so once the marshaller creates a wchar_t* from the string, it never worries about it again. They are strictly an in parameter.

There are two ways to solve this:

- C# does have mutable strings, in the form of the StringBuilder type. Using StringBuilder has the benefit that it is already implemented as a char pointer, so the marshaller simply passes the pointer to the Haskell function. After the function returns and the GC determines that the StringBuilder is no longer in use, it should free the memory (at least in theory).

- Make the library just free any CWString it dereferences.

For now I’ve chosen the second approach, for no reason other than that it requires the least change to existing code. In the future I’ll adopt the approach that Hs2lib frees any arguments passed to it. I don’t know if this is the convention usually used. If someone has something against this approach I would love to hear it.
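A minimal sketch of what "free whatever you dereference" could look like is shown below, using only functions from base. `peekAndFree` is a hypothetical helper, not the Hs2lib function, and it rests on one loud assumption: the buffer was allocated with the same C `malloc` that `Foreign.Marshal.Alloc.free` releases, as is the case for buffers created by `newCWString`.

```haskell
import Foreign.C.String (CWString, newCWString, peekCWString)
import Foreign.Marshal.Alloc (free)

-- Copy a wide C string into a Haskell String, then release the C buffer.
-- ASSUMPTION: the buffer came from the C runtime's malloc (e.g. via newCWString).
-- Freeing a pointer obtained from any other allocator is undefined behaviour.
peekAndFree :: CWString -> IO String
peekAndFree p = do
  s <- peekCWString p
  -- Force the whole copy before the buffer goes away, as a precaution.
  length s `seq` free p
  return s
```

A quick round trip in GHCi would be `newCWString "hello" >>= peekAndFree`. The helper is only as safe as its assumption about who allocated the pointer, so it should not be applied blindly to pointers handed in from foreign code.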

Update: There’s a fairly big gotcha that I’ve recently discovered. We have to remember that the String type in .NET is immutable, so when the marshaller sends it to our Haskell code, the CWString we get there is a copy of the original. We have to free this copy ourselves: when GC is performed in C#, it won’t affect the CWString.

The problem, however, is that when we free it in the Haskell code we can’t use freeCWString, because the pointer was not allocated with the C runtime (msvcrt.dll) allocator. There are three ways (that I know of) to solve this:

- use char* in your C# code instead of String when calling a Haskell function. You then have the pointer to free when your call returns; alternatively, initialize the pointer using fixed.

- import CoTaskMemFree in Haskell and free the pointer in Haskell

- use StringBuilder instead of String. I’m not entirely sure about this one, but the idea is that since StringBuilder is implemented as a native pointer, the Marshaller just passes this pointer to your Haskell code (which can also update it btw). When GC is performed after the call returns, the StringBuilder should be freed.

I’ve once again updated the library to not free any pointers. This prevents a nasty heap corruption. To avoid memory leaks in C#, you are free to choose between solutions 1 and 3 presented here.

Results

With this new code in place, I once again ran the profiled lexer to generate a dump. This time, when I analyze the result, I get:

    *** Program starting up…
    *** Reading file ‘.\MemDumps\Memory.dump’…
    *** Found 33027 record(s).
    *** Analyzing [********************] 100.00%
    *** Found 0 outstanding allocation(s).
    Congratulations, No memory leak(s) detected.
    *** Cleaning up….

And so the memory leaks are fixed. It’s worth noting that the analyzer can be quite slow: it uses a flat, LinkedList-like structure and lists to do the analysis. In future versions I will replace these with a tree-like structure and arrays, respectively.